Lessons from the Data Ecosystem: Part 2

In my previous post, I covered some recurring themes from our conversations with different user communities in the data ecosystem, which highlight common facets of data projects.

But different user communities also have their own distinct inclinations when it comes to working with data.

We’ve had the pleasure of talking with users from a range of communities and learning about their data projects. Although there’s some overlap, these groups fall into three broad categories: 1) enterprise IT professionals, 2) data journalists, and 3) full-stack and back-end Web developers.

Here are some insights we’ve gained from these user communities.

IT professionals: on the front lines of digital transformation

When we started Flex.io, our initial point of reference for data projects came from working with enterprise IT professionals.

One of the first things we noticed is that there’s a lot of push and pull in the collaboration between IT and stakeholders in other departments. In many cases, IT departments control one part of the workflow for data projects, but because the data science and data analytics happen at the departmental level, IT doesn’t manage the full process.

We’ve nicknamed this the “IT handoff.”

The involvement of different groups can make it extremely challenging to implement changes in how the data is processed in the overall project workflow. For instance, Isaac Sacolick, Global CIO at Greenwich Associates, observes in a great post on data integration:

“As hard as it is to modify software, modifying a semi-automated (in other words, partially manual) data integration can be even more daunting even if the steps are documented. For example, fixing data quality issues or addressing boundary conditions tend to be undocumented steps performed by subject matter experts.”

As a result, IT professionals are almost always juggling competing considerations. Beyond the specifics of a given data project, they need to maintain continuity with legacy data infrastructure, avoid breaking existing systems or processes, and meet the data-related needs of their organization. These competing goals often lead IT departments to keep a tight lid on data resources, but the same tension suggests it may be better to let a thousand data silos bloom.

And as Sacolick goes on to note, there’s often a lot at stake:

“The complexity slows down IT, and if data and analytics is strategic to the business, it frustrates business leaders that they can’t just add a new data source … or strategically change the downstream analytics.”

Ultimately, IT professionals are on the front lines of the digital transformation that’s disrupting their industries and radically changing their organizations. The central challenge for them is to stay ahead of the curve of this transformation by enabling their organizations to work with data more effectively.

Data journalists: questioning data and verifying results

Unlike IT professionals, data journalists typically don’t need to worry about supporting data infrastructure or meeting the data-related needs of a larger organization. In fact, as we learned from our summer research project, many of them work as freelancers or on small teams, sprinting through data projects with short life cycles to meet tight reporting deadlines.

However, because their work is public and subject to fact-checking, data journalists have a strong commitment to verifying the reliability of data sources and making sure they’re used accurately.

For data journalists, checking the data is an essential task from the start. As Alex Richards explains in his interview:

“I try to figure out what’s wrong with [the data]. Because there’s always something wrong with it. … I’m trying to figure out if the data is an accurate representation of reality, which is not always the case.”

This is a common thread with other data journalists as well. We hear a lot about the many ways that data can be misunderstood or misused. For instance, Daniel Hertz points out that it’s all too easy to end up with an unworkable margin of error when analyzing Census data. And Alden Loury cautions that missing records and gaps in a data set can invalidate how it’s used.
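To make those two cautions concrete, here is a minimal Python sketch of the kind of sanity checks they imply. It is our own illustration, not a method described by Hertz or Loury. The function names and sample numbers are hypothetical, and the sketch assumes margins of error reported at the 90 percent confidence level, as the Census Bureau does for American Community Survey estimates.

```python
import math

# Assumption: margins of error are reported at the 90 percent confidence
# level (as with American Community Survey estimates), so z is 1.645.
Z_90 = 1.645


def coefficient_of_variation(estimate, margin_of_error):
    """Return the standard error as a fraction of the estimate."""
    if estimate == 0:
        return math.inf
    standard_error = margin_of_error / Z_90
    return standard_error / abs(estimate)


def missing_periods(observed, expected):
    """Return the expected periods (for example, years) that have no records."""
    seen = set(observed)
    return [period for period in expected if period not in seen]


# Hypothetical numbers, for illustration only.
cv = coefficient_of_variation(estimate=1200, margin_of_error=450)
print(f"Relative standard error: {cv:.1%}")
print("Years with no records:", missing_periods([2010, 2011, 2013], range(2010, 2015)))
```

How large a relative error is too large, and how many gaps are too many, depends on the story. The point is only that both checks are cheap to run before any analysis starts.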

Given their commitment to verifying data sources, it’s perhaps not surprising that many data journalists rely on open data and advocate publishing the analytic results and methodology for their data projects.

Open data provides transparency about where the statistics and conclusions for a data project come from and allows anyone to go to the source to check whether the data is being used correctly. Like data journalism itself, open data serves as a public resource.

Developers: cutting waste and streamlining code

More recently, we’ve benefited a lot from input and feedback from developers who work primarily on Web applications and less often on data projects. For the most part, these have been back-end or full-stack developers on the lookout for Web services that can help them cut large items from their development to-do list.

One of the things we appreciate most about developers is their endless drive for efficiency. Developers love a faster and more direct way to do things, whether it’s civic hackers coding away at Chi Hack Night or an astrophysicist from GitHub sharing tips on removing friction from open source projects at a meetup.

Developers bring this same approach to data projects.

For instance, in our conversation with Chris Groskopf, he mentions that a key reason he built csvkit was to process CSV data more easily:

“With csvkit, that was designed to solve a problem of constantly doing the same thing over and over again and it taking too long every time. I can write Python code to cut columns out of a CSV file and it won’t take me that long to do that, but I’m writing custom code every time.”
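For a sense of what that one-off custom code looks like, here is a minimal sketch using only Python's standard `csv` module. It is our own illustration, not code from csvkit, and the function names and sample data are hypothetical.

```python
import csv
import io


def cut_columns(source, wanted):
    """Yield each row of a CSV source, keeping only the named columns."""
    reader = csv.DictReader(source)
    missing = [name for name in wanted if name not in (reader.fieldnames or [])]
    if missing:
        raise KeyError(f"columns not found: {missing}")
    for row in reader:
        yield {name: row[name] for name in wanted}


def cut_to_text(source, wanted):
    """Return the selected columns as CSV text, header included."""
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=wanted, lineterminator="\n")
    writer.writeheader()
    writer.writerows(cut_columns(source, wanted))
    return out.getvalue()


# Hypothetical sample data, for illustration only.
sample = io.StringIO("city,state,population\nChicago,IL,2700000\nGary,IN,69000\n")
print(cut_to_text(sample, ["city", "population"]))
```

It's short, but it still has to be written, tested, and adapted for every new file. csvkit's `csvcut` command handles the same job from the shell.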

Making data projects more efficient also addresses one of the most annoying problems developers have – distractions that break their concentration and take away their focus on writing code. The classic graph, “a day in the life of a programmer,” illustrates this brilliantly:

Unfortunately, data projects typically involve too many meetings, require too much back-and-forth between different groups, and take far too long. These “data-driven disruptions” happen every time a developer has to answer an email from an analytic end-user asking for clarification about what a data set means or for help setting up a data feed.

And business leaders wonder why critical updates to their applications perpetually fall behind schedule.

The good news is that streamlining the steps in data projects is a lot like streamlining the process of writing code. As developers bring their drive for efficiency to data projects, they not only save themselves time and effort but also improve the agility of analytic teams and increase their capacity to work with data.

Recent Posts

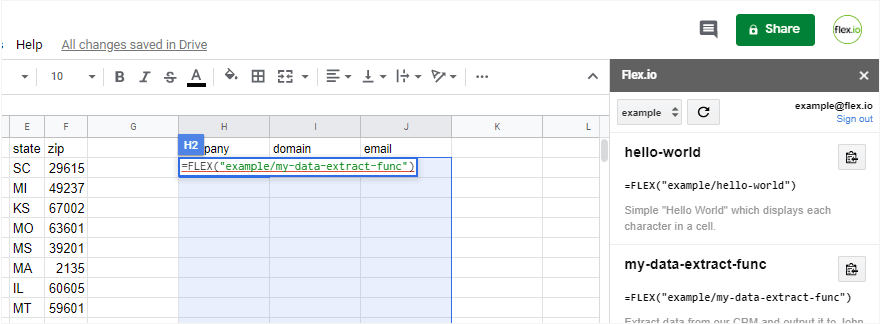

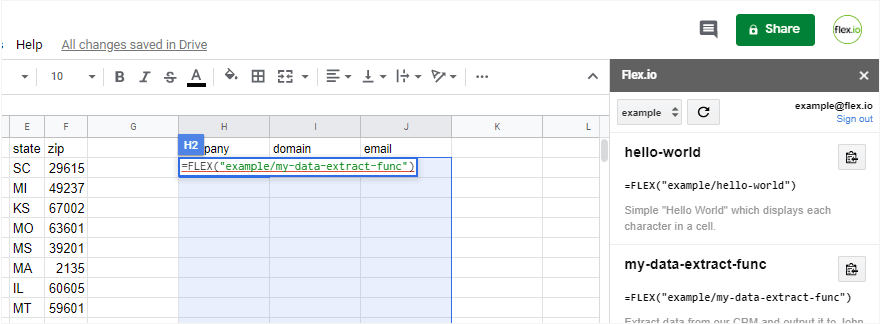

Integrating Web Services with Microsoft Excel and Google Sheets

The "Aha" Moment: How to Onboard an API Service and Get Active Users

Introducing Serverless Data Feeds

Share Data Without Sharing Credentials: Introducing Pipe-level Permissions

Lessons from the Data Ecosystem: Part 2